Organizations use artificial intelligence (AI) and machine learning (ML) to gain visibility into customer behavior, operational efficiency, and many other business challenges. These trends are driving companies to invest heavily in data science technology and teams to build and train analytical models. Businesses are benefiting from these investments, but greater value can be realized when AI/ML practices are operationalized.

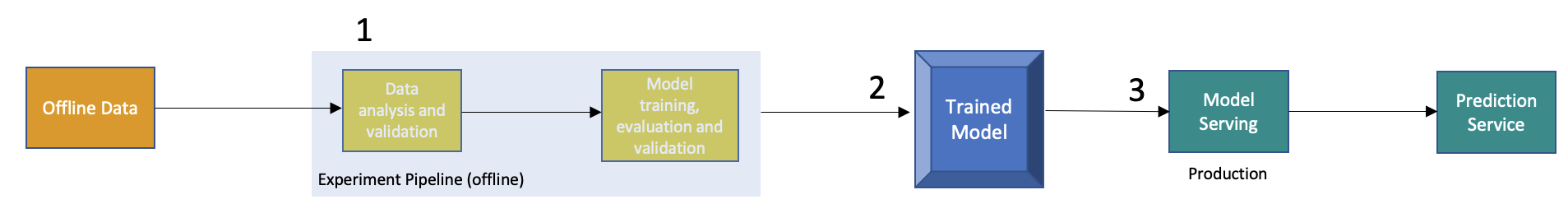

Most enterprises today perform ML tasks manually, driven by scripts. The diagram below is a typical example of a manual ML process:

However, this process is laden with a number of challenges and constraints:

- Data is manually delivered into and out of the models.

- Any time a model changes, IT assistance is required.

- Data scientists consume offline data (data at rest) for modeling. There is no automated data pipeline to deliver data quickly, in real time, and in the right format.

- Because modeling lacks a CI/CD pipeline, all tests and validations are handled manually as part of scripts or notebooks. This leads to coding errors and incorrect models, which degrade predictive services and in turn cause compliance problems, false positives, and lost revenue.

- Manual processes lack active performance monitoring. For example, what if the model isn't working correctly in production and you'd like to understand how and why?

- Manual model processing lacks the ability to compare new models against your current production model on particular metrics (e.g., A/B testing).
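To make the last two gaps concrete, here is a minimal Python sketch of an automated check that could replace a manual notebook comparison. It is an illustration under stated assumptions, not how any particular product does it: the names `accuracy`, `compare_models`, `min_accuracy`, and `min_gain` are hypothetical, a "model" is assumed to be any callable that maps a feature vector to a label, and accuracy stands in for whatever metric your use case actually requires.

```python
from dataclasses import dataclass
from typing import Callable, Sequence

# Assumption: a model is any callable that maps a feature vector to a label.
Model = Callable[[Sequence[float]], int]


def accuracy(model: Model,
             features: Sequence[Sequence[float]],
             labels: Sequence[int]) -> float:
    """Fraction of held-out examples the model labels correctly."""
    if len(features) != len(labels):
        raise ValueError("features and labels must have the same length")
    if not labels:
        raise ValueError("evaluation set is empty")
    correct = sum(1 for x, y in zip(features, labels) if model(x) == y)
    return correct / len(labels)


@dataclass
class Comparison:
    production_accuracy: float
    candidate_accuracy: float
    promote: bool


def compare_models(production: Model,
                   candidate: Model,
                   features: Sequence[Sequence[float]],
                   labels: Sequence[int],
                   min_accuracy: float = 0.8,
                   min_gain: float = 0.0) -> Comparison:
    """Score both models on the same held-out data.

    The candidate is flagged for promotion only if it clears an absolute
    quality bar (min_accuracy) and beats production by more than min_gain.
    Both thresholds are illustrative defaults.
    """
    prod_acc = accuracy(production, features, labels)
    cand_acc = accuracy(candidate, features, labels)
    promote = cand_acc >= min_accuracy and (cand_acc - prod_acc) > min_gain
    return Comparison(prod_acc, cand_acc, promote)
```

A check like this can run as an automated test on every model change, so the comparison against the production model no longer depends on someone rerunning a script by hand.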

Traditionally, developing, deploying, and continuously improving an ML application has been complex, causing many organizations to overlook the benefits of automating data pipelines.

To tackle the above challenges, an ML operations (MLOps) pipeline is needed. The example below outlines what a typical MLOps pipeline looks like:

1. Data scientists consume offline data for data analysis, modeling, and validation.

2. Once the model is pushed through the pipeline deployment, it is passed on to the automated pipeline. If there is any new data, it is extracted and transformed for model training and validation.

3. Once a model is trained and performs better than the one already serving in production, the trained model is pushed to a repository.

4. The trained model is then picked up and deployed in production.

5. The prediction service emits labels. This service could have multiple consumers.

6. A performance monitoring service consumes this data and checks for any performance degradation.

7. If a new stream of data comes in, the data is sent through an ETL pipeline and is eventually used by data scientists. The trigger service is also called.

8. The trigger service can be scheduled depending on the use case. We’ve seen the following use cases in enterprises:

   - When there is performance degradation in the model.

   - When there is new incoming data.
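Steps 6 and 8 can be sketched in a few lines of Python. This is a simplified illustration, not the API of any specific framework: `PerformanceMonitor`, `retraining_reasons`, and the `tolerance`, `window`, and `min_new_records` parameters are all hypothetical names. It also assumes that ground-truth labels eventually become available so that predictions can be scored, and it uses rolling accuracy as a stand-in for whatever metric matters in your use case.

```python
from collections import deque
from typing import Deque, List, Optional


class PerformanceMonitor:
    """Tracks rolling accuracy of the prediction service (step 6).

    Assumption: the true label for a prediction arrives at some later
    point, so each prediction can be scored as correct or incorrect.
    """

    def __init__(self, baseline_accuracy: float,
                 tolerance: float = 0.05, window: int = 100) -> None:
        self.baseline_accuracy = baseline_accuracy
        self.tolerance = tolerance
        # Only the most recent `window` outcomes are kept.
        self._outcomes: Deque[bool] = deque(maxlen=window)

    def record(self, predicted_label: int, actual_label: int) -> None:
        self._outcomes.append(predicted_label == actual_label)

    def rolling_accuracy(self) -> Optional[float]:
        if not self._outcomes:
            return None  # nothing scored yet
        return sum(self._outcomes) / len(self._outcomes)

    def is_degraded(self) -> bool:
        acc = self.rolling_accuracy()
        return acc is not None and acc < self.baseline_accuracy - self.tolerance


def retraining_reasons(monitor: PerformanceMonitor,
                       new_record_count: int,
                       min_new_records: int = 1000) -> List[str]:
    """Trigger logic (step 8): list the reasons to retrain, if any.

    An empty list means the pipeline should not be triggered.
    """
    reasons: List[str] = []
    if monitor.is_degraded():
        reasons.append("performance_degradation")
    if new_record_count >= min_new_records:
        reasons.append("new_data")
    return reasons
```

Returning the reasons rather than a plain boolean is a design choice for the sketch: it lets whatever starts the retraining job log why it ran, which helps when auditing model changes later.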

Many large-scale businesses have been successful using automated MLOps pipelines, including Lightbend customers HPE and Capital One.

Hewlett Packard Enterprise (HPE) has implemented automated MLOps pipelines for predictive analysis with IoT. Its InfoSight product consumes trillions of metrics from billions of global sensors. Predictive analysis is performed on the data and served in real time. Automated MLOps pipelines deliver real-time analysis while scaling to traffic and keeping compute resources to a minimum.

Capital One uses automated MLOps pipelines for real-time decision making around auto loans, running 12 ML models in production. Before these models were implemented, processes took close to 55 hours to complete; now the same processes complete in just a few seconds.

Akka Data Pipelines has emerged as a leading technology framework to quickly construct automated MLOps pipelines. Using the stream processing engine of your choice, this framework provides the tools that remove the headache and time associated with deploying and managing automated MLOps pipelines. This frees teams to focus on developing business logic and applying ML models that infuse intelligence into data for real-time decisioning.

Even better, Akka Platform, a set of building blocks for rapidly building, testing, and deploying Reactive microservices, complements Akka Data Pipelines for a comprehensive solution that further accelerates developer productivity, time-to-market, and bottom-line growth.

Akka Data Pipelines is built to get the most out of your application architecture, not only significantly reducing IT and ML effort but also cutting costs by using compute resources efficiently.

To learn more about how Akka Data Pipelines works and the business value it can bring to your company, check out our latest on-demand webinar, Machine Learning in Action: Applying ML to Real-Time Streams of Data.